Overview of Docker Workflow

Docker is a tool for packaging, sharing, and running applications consistently across machines. For beginners, it’s also a practical way to learn how applications are deployed, because an application’s dependencies travel with it instead of being set up by hand on each machine. This section walks through the Docker workflow step by step: images, containers, networking, storage, and multi-container management.

In this section, we’ll cover the following topics:

- Introduction to Docker Workflow

- Docker Image Lifecycle

- Docker Container Lifecycle

- Docker Network and Storage

- Multi-Container Management with Docker Compose

Introduction to Docker Workflow

Docker helps developers by providing a consistent way to build and run applications, regardless of where they’re deployed. Here are the key ideas to understand:

- Docker Images and Image Registry: Think of images as the blueprint for your application. These blueprints are stored in a library called a registry, like Docker Hub, where you can find pre-built images.

- Docker Containers: Containers are like actual houses built using the blueprint (image). They’re lightweight and portable, running your application in an isolated environment.

- Docker Network and Storage: Containers can communicate with each other through networks. Storage is used to save data so it doesn’t disappear when a container is removed.

- Docker Compose: If your application is made up of multiple parts (e.g., a web app and a database), Docker Compose helps manage all those parts easily.

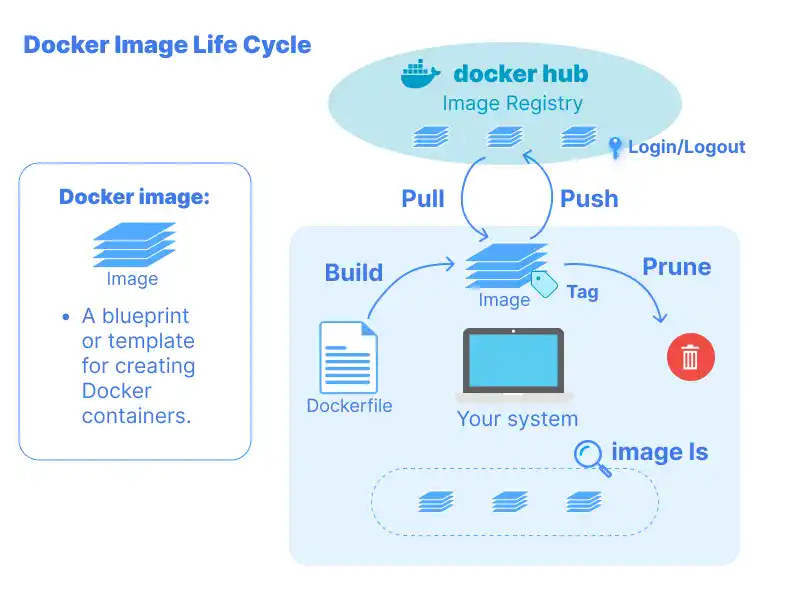

Docker Image Lifecycle

Docker images are the starting point of the Docker workflow. They are created, used, and sometimes removed or shared. We’ll go deeper into these details in Chapter 3 and Chapter 5.

Using Docker Images from Image Registry (Docker Hub)

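Docker Hub is a public registry where developers can find ready-to-use images. For example, if you need a Python environment, you can pull a Python image from Docker Hub instead of building one from scratch. This saves time, and images marked as Docker Official Images or published by verified publishers are a reliable starting point because they are actively maintained. Other community images vary in quality, so check who publishes an image before relying on it.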
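As a rough illustration, the Python sketch below only assembles the `docker pull` command line and prints it, so it runs even without Docker installed. The helper name `pull_command` and the `3.12-slim` tag are assumptions chosen for this example, not part of Docker itself.

```python
import shlex


def pull_command(repository: str, tag: str = "latest") -> list[str]:
    """Build the argument list for `docker pull REPOSITORY:TAG`."""
    return ["docker", "pull", f"{repository}:{tag}"]


# "3.12-slim" is only an example tag; check Docker Hub for current tags.
cmd = pull_command("python", "3.12-slim")
print(shlex.join(cmd))

# To really pull the image (Docker must be installed and running):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Building the command as a list rather than one long string keeps each argument separate, which is the form `subprocess.run` expects.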

Checking and Removing Images

Docker images can pile up over time, especially if you’re experimenting or rebuilding frequently. Unused images take up disk space, so it’s worth removing them regularly. Docker makes it easy to list the images you have and delete those you no longer need.
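The commands involved can be sketched as follows. This Python snippet just prints them; the constant names and the `remove_image` helper are made up for the example, while the `docker image` subcommands themselves are standard.

```python
import shlex

# Show the images stored on this machine.
LIST_IMAGES = ["docker", "image", "ls"]

# Remove dangling images (untagged leftovers from rebuilds).
# Docker asks for confirmation before deleting.
PRUNE_DANGLING = ["docker", "image", "prune"]

# Remove every image that no container is using; more aggressive.
PRUNE_UNUSED = ["docker", "image", "prune", "--all"]


def remove_image(reference: str) -> list[str]:
    """Build `docker image rm REFERENCE` for a name:tag or image ID."""
    return ["docker", "image", "rm", reference]


for cmd in (LIST_IMAGES, remove_image("python:3.12-slim"),
            PRUNE_DANGLING, PRUNE_UNUSED):
    print(shlex.join(cmd))
```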

Building Custom Docker Images

Sometimes, you’ll need to create your own image tailored to your application. This involves writing a Dockerfile, which is like a recipe telling Docker how to build the image. In it you specify the base image to start from (often a slim operating system or language runtime), the software dependencies to install, and the files and configuration to copy in.
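To make the recipe idea concrete, here is a minimal sketch that holds a sample Dockerfile as a Python string and assembles the matching `docker build` command. The application files (`requirements.txt`, `app.py`) and the image name `myapp:1.0` are hypothetical; a real project would adjust each line.

```python
import shlex

# A hypothetical Dockerfile for a small Python app. The file names
# requirements.txt and app.py are assumptions for illustration.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def build_command(tag: str, context: str = ".") -> list[str]:
    """Build `docker build --tag TAG CONTEXT`."""
    return ["docker", "build", "--tag", tag, context]


print(DOCKERFILE)
print(shlex.join(build_command("myapp:1.0")))
```

To use it, the text would be saved as a file named `Dockerfile` in the project directory, and the build command run from that same directory.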

Sharing Docker Images

Once you’ve built an image, you can share it with others by pushing it to Docker Hub or a private registry. This is great for team collaboration, as others can pull the image and run it without needing to recreate it.
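Sharing usually takes three steps: log in, give the image a name that includes your registry account, and push it. The sketch below prints those steps; `exampleuser` and `myapp:1.0` are placeholders, and `share_commands` is a helper invented for this example.

```python
import shlex


def share_commands(local_image: str, remote_image: str) -> list[list[str]]:
    """Commands to publish a local image under a registry name."""
    return [
        # Authenticate (against Docker Hub unless told otherwise).
        ["docker", "login"],
        # Give the local image an additional, registry-qualified name.
        ["docker", "tag", local_image, remote_image],
        # Upload the image under that name.
        ["docker", "push", remote_image],
    ]


# "exampleuser" is a placeholder for your own Docker Hub account.
for cmd in share_commands("myapp:1.0", "exampleuser/myapp:1.0"):
    print(shlex.join(cmd))
```

A teammate could then fetch the image with `docker pull exampleuser/myapp:1.0`.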

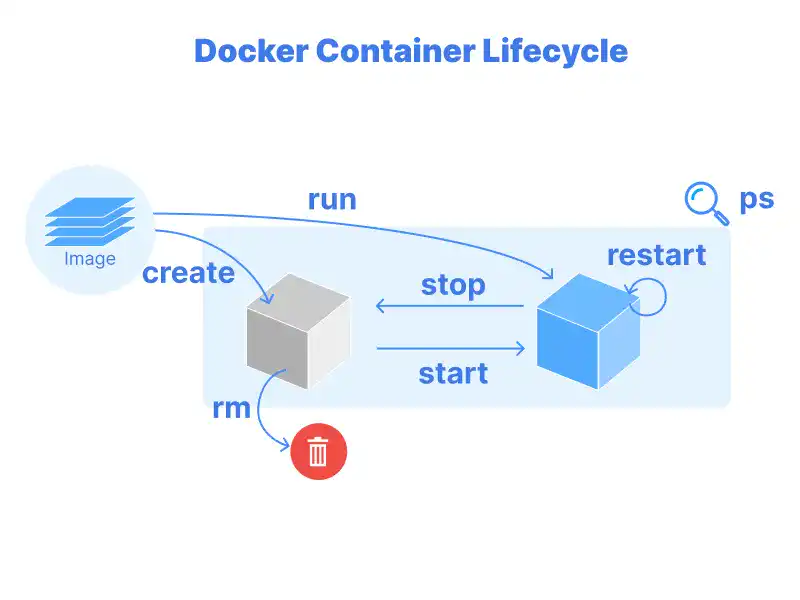

Docker Container Lifecycle

A container is a running instance of an image. It’s where your application lives and works. Understanding the container lifecycle (creation, operation, and deletion) is key to using Docker effectively. Chapter 3 explains this lifecycle in detail.

Creating and Running Containers

Creating a container is like starting a new instance of your application. You can customize how it runs by specifying things like which ports to publish or which environment variables your app needs. Containers start quickly because they share the host’s kernel instead of booting a full operating system, which makes them well suited to testing and deploying applications.
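As an illustration of those options, this sketch assembles a `docker run` command with a container name, a published port, and an environment variable. The helper `run_command` and the variable `APP_MODE` are hypothetical; the flags are standard `docker run` options.

```python
import shlex


def run_command(image, name, ports=None, env=None):
    """Build `docker run` for a container that runs in the background.

    ports maps host port -> container port; env maps variable -> value.
    """
    cmd = ["docker", "run", "--detach", "--name", name]
    for host_port, container_port in (ports or {}).items():
        cmd += ["--publish", f"{host_port}:{container_port}"]
    for key, value in (env or {}).items():
        cmd += ["--env", f"{key}={value}"]
    return cmd + [image]  # the image name comes after all options


# APP_MODE is a made-up variable, shown only to illustrate --env.
cmd = run_command("nginx", "web", ports={8080: 80}, env={"APP_MODE": "dev"})
print(shlex.join(cmd))
```

With this command, requests to port 8080 on the host would be forwarded to port 80 inside the container.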

Pausing, Stopping, and Restarting Containers

Sometimes you need to pause or stop a container without deleting it. Pausing freezes the container’s processes in place, which can be handy while debugging; stopping shuts the processes down, for example during maintenance. A stopped container can be started again quickly, without needing to recreate it.
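One way to picture these operations is as a small state machine. The sketch below is a simplified model, not Docker’s actual implementation: it tracks only four states, and it rejects any transition it does not list, which is stricter than Docker itself.

```python
# (current state, docker subcommand) -> next state. A simplified model
# that leaves out states such as "restarting" and "dead".
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("running", "restart"): "running",
    ("exited", "start"): "running",
}


def apply_commands(state: str, commands: list[str]) -> str:
    """Follow a sequence of docker subcommands from a starting state."""
    for command in commands:
        key = (state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command!r} a container that is {state!r}")
        state = TRANSITIONS[key]
    return state


final = apply_commands("created", ["start", "pause", "unpause", "stop", "start"])
print(final)  # running
```

Each command in the list corresponds to a real subcommand, for example `docker pause web` or `docker start web` for a container named `web`.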

Scaling and Managing Containers

If your application needs to handle more traffic, you can scale it by running multiple containers at the same time. Tools like Docker Compose or Kubernetes make it easy to manage large numbers of containers, ensuring they work together seamlessly.
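With Docker Compose, scaling can be as small as one extra option. The sketch below assumes a Compose file in the current directory that defines a service named `web` (a hypothetical name) and that the service does not bind a fixed host port, since several copies cannot share the same one.

```python
import shlex


def scale_command(service: str, replicas: int) -> list[str]:
    """Build `docker compose up --detach --scale SERVICE=REPLICAS`."""
    if replicas < 0:
        raise ValueError("replicas must be zero or more")
    return ["docker", "compose", "up", "--detach",
            "--scale", f"{service}={replicas}"]


# "web" is a hypothetical service name from a Compose file.
print(shlex.join(scale_command("web", 3)))
```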

Deleting Containers

Once you’re done with a container, it’s a good idea to delete it. A stopped container no longer uses CPU or memory, but it still takes up disk space until it is removed. Regular cleanup keeps your system organized.
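A cleanup pass might look like the sketch below, which prints the commands rather than running them. The container name `web` and the helper `remove_container` are assumptions for the example.

```python
import shlex

# List stopped containers so you can see what would be removed.
LIST_EXITED = ["docker", "container", "ls", "--all",
               "--filter", "status=exited"]

# Remove all stopped containers in one step (asks for confirmation).
PRUNE_STOPPED = ["docker", "container", "prune"]


def remove_container(name: str, force: bool = False) -> list[str]:
    """Build `docker container rm [--force] NAME`.

    Without --force, Docker refuses to remove a running container.
    """
    cmd = ["docker", "container", "rm"]
    if force:
        cmd.append("--force")
    return cmd + [name]


for cmd in (LIST_EXITED, remove_container("web"), PRUNE_STOPPED):
    print(shlex.join(cmd))
```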

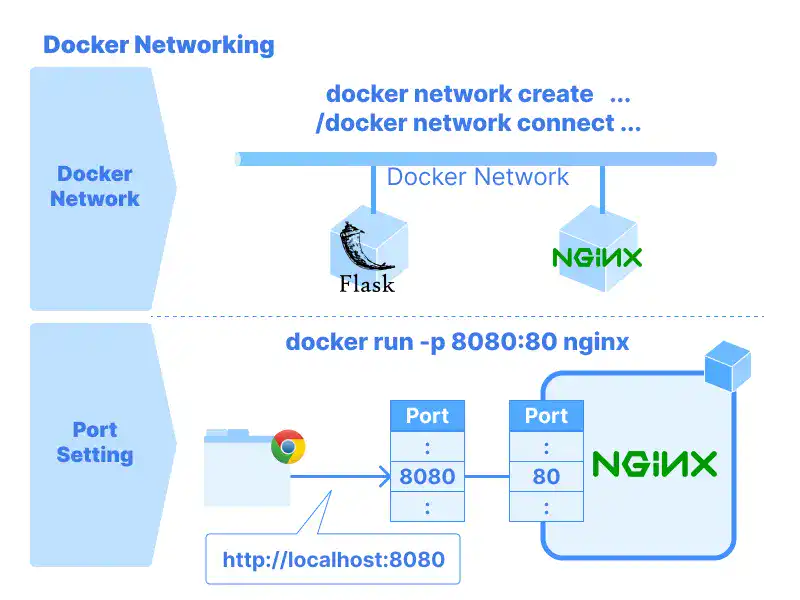

Docker Network and Storage

Networking and storage are essential parts of running containers. They allow containers to communicate and ensure important data isn’t lost. We’ll go into more detail about these topics in Chapter 4.

Managing Docker Network

Docker networks let containers talk to each other or connect to the outside world. For example, if your web app container needs to send data to a database container, they can communicate over a Docker network. You can use the default bridge network or create custom networks for more control; on a custom network, containers can reach each other by container name.
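The web-and-database example could be set up roughly as follows. The network name `app-net`, the container names, and the password value are placeholders, and `nginx` stands in for a real web application.

```python
import shlex

NETWORK = "app-net"  # hypothetical network name

commands = [
    # Create a custom (user-defined) bridge network.
    ["docker", "network", "create", NETWORK],
    # Start a database container attached to that network.
    ["docker", "run", "--detach", "--name", "db", "--network", NETWORK,
     "--env", "POSTGRES_PASSWORD=example", "postgres:16"],
    # Start the web container on the same network and publish its port.
    ["docker", "run", "--detach", "--name", "web", "--network", NETWORK,
     "--publish", "8080:80", "nginx"],
]

for cmd in commands:
    print(shlex.join(cmd))
```

Because both containers share `app-net`, software inside `web` could reach the database using the hostname `db`. Only the web container publishes a port, so the database is not exposed on the host.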

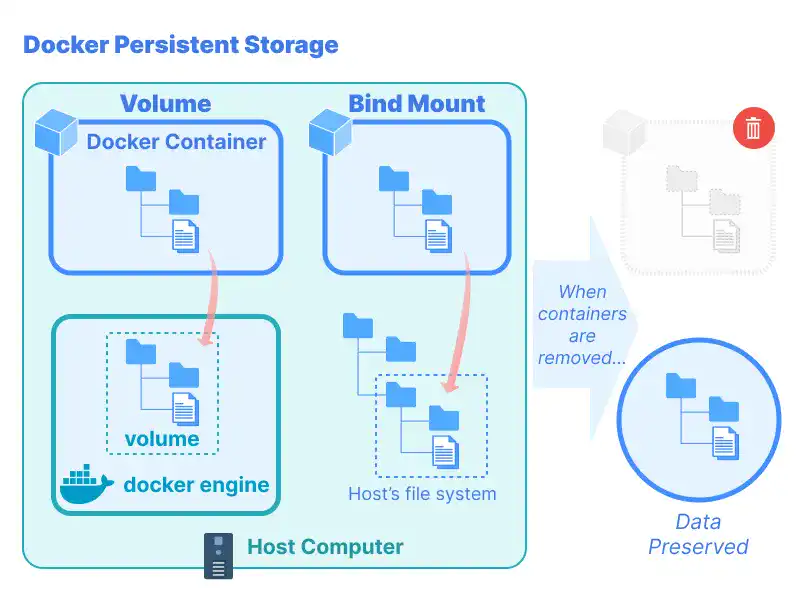

Managing Docker Storage and Data Persistence

By default, data written inside a container lives in that container’s own writable layer. It survives a stop and restart, but it is lost when the container is removed. To keep important data, you can use volumes or bind mounts. These storage options keep data outside the container, so it persists even if the container is deleted and recreated, making them crucial for applications that rely on databases or user-uploaded files.
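The two options differ mainly in who chooses the location on the host. The sketch below builds both kinds of `--mount` option; the volume name `pgdata`, the host path, and the helper names are assumptions for illustration.

```python
import shlex


def volume_mount(volume: str, container_path: str) -> list[str]:
    """Named volume: Docker manages where the data lives on the host."""
    return ["--mount", f"type=volume,source={volume},target={container_path}"]


def bind_mount(host_path: str, container_path: str) -> list[str]:
    """Bind mount: an existing host directory appears in the container."""
    return ["--mount", f"type=bind,source={host_path},target={container_path}"]


create_volume = ["docker", "volume", "create", "pgdata"]

# Keep the database files in the named volume, outside the container.
run_db = (["docker", "run", "--detach", "--name", "db",
           "--env", "POSTGRES_PASSWORD=example"]
          + volume_mount("pgdata", "/var/lib/postgresql/data")
          + ["postgres:16"])

print(shlex.join(create_volume))
print(shlex.join(run_db))
# A bind mount needs an absolute host path; this one is a placeholder.
print(shlex.join(bind_mount("/home/user/project", "/app")))
```

If the `db` container is later removed and recreated with the same mount, the data in `pgdata` is still there.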

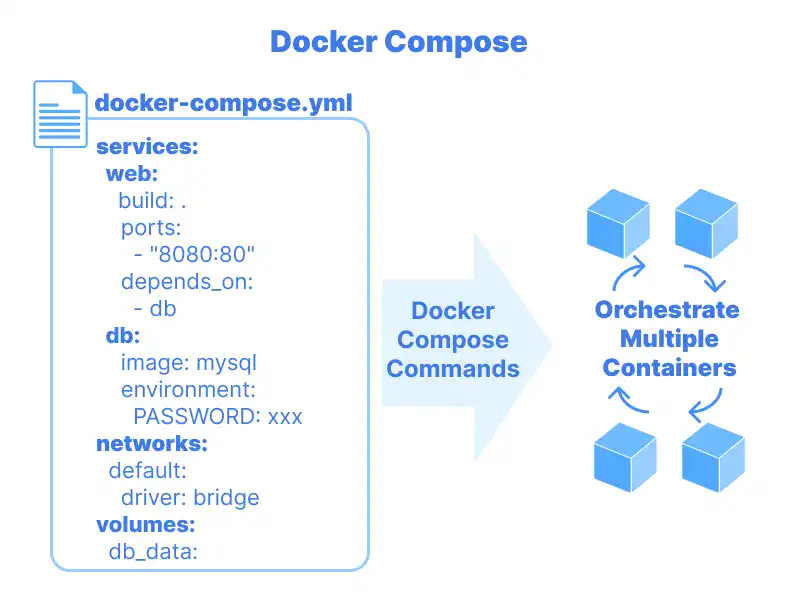

Multi-Container Management with Docker Compose

Applications are often made up of multiple parts. For instance, an e-commerce app might need a web server, a database, and a caching service. Managing these manually can be complex, but Docker Compose simplifies the process. We’ll cover this in more depth in Chapter 6.

Importance of Managing Multiple Containers

When applications are split into multiple containers, each container focuses on a specific task. For example, one container might handle web traffic while another stores data in a database. Managing these individually can be tedious, but using a tool like Docker Compose keeps things organized.

Utilizing Docker Compose

Docker Compose allows you to define all the parts of your application in a single configuration file. This file includes details about each container, like the image it uses, the ports it exposes, and how it connects to other containers. With one command, you can start or stop the entire application stack, saving time and reducing complexity.
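As a minimal sketch, the snippet below holds a sample Compose file as a Python string together with the commands that start and stop the stack. The service names, ports, and password are placeholders, not a recommended setup.

```python
import shlex

# A hypothetical two-service stack; names, ports, and the password are
# placeholders. Save the text as compose.yaml in the project directory.
COMPOSE_FILE = """\
services:
  web:
    image: nginx
    ports:
      - "8080:80"
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    volumes:
      - pgdata:/var/lib/postgresql/data

volumes:
  pgdata:
"""

# Start every service in the background.
START_STACK = ["docker", "compose", "up", "--detach"]
# Stop and remove the containers and network; named volumes are kept.
STOP_STACK = ["docker", "compose", "down"]

print(COMPOSE_FILE)
print(shlex.join(START_STACK))
print(shlex.join(STOP_STACK))
```

The file describes the same pieces covered earlier (images, published ports, and a named volume) in one place, so the whole stack can be recreated from a single file.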

By learning the Docker workflow lifecycle, beginners can gain confidence in building and running applications efficiently. Docker’s tools and concepts might seem overwhelming at first, but breaking them into manageable steps makes them accessible. Mastering images, containers, networks, and multi-container setups will set you up for success in modern software development.

FAQ: Overview of Docker Workflow

What is Docker and why is it useful for developers?

Docker is a tool that helps developers package, share, and run applications consistently across different environments. It simplifies deployment by bundling an application with its dependencies, which removes most environment setup issues.

What are Docker images and how are they used?

Docker images are blueprints for applications, stored in registries like Docker Hub. They can be pulled and used to create containers, ensuring consistency and reliability in application deployment.

How do Docker containers differ from images?

Containers are the running instances of Docker images. They provide an isolated environment for applications, making them lightweight and portable.

What role do networks and storage play in Docker?

Docker networks allow containers to communicate with each other, while storage options like volumes ensure data persistence even when containers are stopped or deleted.

How does Docker Compose help in managing multi-container applications?

Docker Compose allows you to define and manage multiple containers in a single configuration file, simplifying the process of starting and stopping complex application stacks.